NOTICE-AND-ACTION & MODERATION POLICY

1. Purpose & Scope

This Policy sets out [PLATFORM NAME]’s procedures for receiving, assessing, and responding to illegal content notices, user complaints, and moderation decisions in accordance with the EU Digital Services Act (“DSA”). It applies to all content hosted or disseminated via our Services within the EU, reflecting the baseline obligations that have applied to hosting providers and online platforms since 17 February 2024 (with additional duties for VLOPs/VLOSEs).

2. Contact & Interface

- Single Point of Contact (Authorities/Users): [EMAIL ADDRESS], [ONLINE FORM LINK].

- Legal Representative in the EU: [NAME, ADDRESS].

- Notice Portal: Provide an accessible electronic form that accepts submissions in each official EU language in which the Services are offered.

3. Notice Submission Requirements

We encourage users and authorities to submit notices via our Notice Portal. A valid notice should include:

- URL or precise location of content

- Description of why the content is illegal

- Legal basis invoked (e.g., national law article)

- Contact information of the notifier

- Statement confirming the notifier's good-faith belief that the information in the notice is accurate and complete

- Optional: supporting evidence (screenshots, documents)

Trusted flaggers may submit notices via dedicated API endpoints or channels. Notices from trusted flaggers receive priority handling.
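
As an illustration of how the checklist above might be enforced at intake, the following is a minimal sketch of a completeness check. The `Notice` class, its field names, and `missing_elements` are hypothetical and do not come from this Policy or from any DSA-mandated schema. Because the Policy says a notice "should" include these elements, the result is better suited to prompting the notifier for missing details than to automatic rejection.

```python
from dataclasses import dataclass, field


@dataclass
class Notice:
    """Hypothetical intake record mirroring the checklist in Section 3."""
    content_url: str = ""            # URL or precise location of content
    explanation: str = ""            # why the content is considered illegal
    legal_basis: str = ""            # e.g., national law article
    notifier_contact: str = ""       # contact information of the notifier
    good_faith_statement: bool = False
    evidence: list[str] = field(default_factory=list)  # optional attachments


# Text fields that must be non-empty for the notice to count as complete.
REQUIRED_TEXT_FIELDS = ("content_url", "explanation", "legal_basis", "notifier_contact")


def missing_elements(notice: Notice) -> list[str]:
    """Return the names of required elements that are absent or blank."""
    missing = [name for name in REQUIRED_TEXT_FIELDS
               if not getattr(notice, name).strip()]
    if not notice.good_faith_statement:
        missing.append("good_faith_statement")
    return missing
```

An empty list means every required element is present; supporting evidence is never reported as missing because it is optional.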

4. Triage & Decision Timelines

- Acknowledgment: Automated acknowledgment within [24 HOURS].

- Assessment: Review illegal content notices within [48 HOURS], or sooner where there is a risk of imminent harm.

- Action: Remove or disable access to illegal content when justified, or decline with explanation.

- Statement of Reasons: Provide reasoned decisions using the standardized DSA format and include information on appeal rights.
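
The acknowledgment and assessment windows above could be tracked per notice along the following lines. This is a sketch only: the 24-hour and 48-hour values simply mirror the bracketed placeholders, the 4-hour urgent window is an invented example of "sooner", and `triage_deadlines` is a hypothetical helper name.

```python
from datetime import datetime, timedelta, timezone

# Values mirror the bracketed placeholders in this section; adjust to the adopted policy.
ACK_WINDOW = timedelta(hours=24)
REVIEW_WINDOW = timedelta(hours=48)
# Assumption: the Policy only says "or sooner" for imminent harm; 4 hours is illustrative.
URGENT_REVIEW_WINDOW = timedelta(hours=4)


def triage_deadlines(received_at: datetime, imminent_harm: bool = False) -> dict:
    """Compute the acknowledgment and assessment deadlines for one notice."""
    review_window = URGENT_REVIEW_WINDOW if imminent_harm else REVIEW_WINDOW
    return {
        "acknowledge_by": received_at + ACK_WINDOW,
        "assess_by": received_at + review_window,
    }


received = datetime(2024, 3, 1, 9, 0, tzinfo=timezone.utc)
deadlines = triage_deadlines(received)
```

Using timezone-aware timestamps avoids ambiguity when notices arrive from several Member States.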

5. Measures Applied

Potential actions include removal, geo-blocking, age-gating, demonetization, or account suspension. Measures must be necessary and proportionate. Document the specific legal basis for each action.

6. User Notifications & Appeals

- Notify affected users promptly with the statement of reasons, unless notification would impede law enforcement.

- Provide an internal complaint-handling system accessible for [SIX MONTHS] after the decision.

- Resolve appeals within [14 DAYS].

- Inform users of available out-of-court dispute settlement bodies where applicable.
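
The complaint window and appeal deadline above can be checked mechanically. The sketch below assumes the bracketed placeholders are adopted as six calendar months and 14 calendar days; the function names are hypothetical, and the choice to clamp month-end dates (for example, 31 August plus six months becomes the last day of February) is an assumption rather than a rule stated in this Policy.

```python
import calendar
from datetime import date, timedelta

COMPLAINT_WINDOW_MONTHS = 6   # mirrors the [SIX MONTHS] placeholder
APPEAL_RESOLUTION_DAYS = 14   # mirrors the [14 DAYS] placeholder


def add_months(start: date, months: int) -> date:
    """Add calendar months, clamping to the last day of a shorter month."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def complaint_window_open(decision_date: date, today: date) -> bool:
    """True while a complaint against the decision can still be lodged."""
    return today <= add_months(decision_date, COMPLAINT_WINDOW_MONTHS)


def appeal_due_date(filed_on: date) -> date:
    """Date by which an appeal filed on `filed_on` should be resolved."""
    return filed_on + timedelta(days=APPEAL_RESOLUTION_DAYS)
```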

7. Trusted Flaggers & Repeat Infringers

- Maintain a list of trusted flaggers awarded that status by the Digital Services Coordinators of the Member States.

- Apply enhanced handling to their notices and track accuracy metrics.

- Implement policies addressing repeat infringers, including graduated sanctions and account termination thresholds.

8. Illegal Content Categories & Specialized Workflows

Outline workflows for specific categories (terrorism, child sexual abuse material, IP infringement, consumer fraud). Align each workflow with the applicable legal requirements and report to authorities as mandated.

9. Transparency Reporting

Publish transparency reports at least once a year (at least every six months if designated as a VLOP/VLOSE) covering moderation decisions, notice volumes, response times, suspensions, and risk mitigation efforts. Use the Commission’s reporting template where applicable.

10. Systemic Risk Mitigation (VLOP/VLOSE Module)

If designated as a VLOP/VLOSE: conduct annual risk assessments addressing dissemination of illegal content, fundamental rights, civic discourse, and gender-based violence. Implement mitigation measures, commission independent audits, and provide access to data for vetted researchers.

11. Recordkeeping & Audit

Retain notices, decisions, and statements of reasons for at least [SIX YEARS], ensuring data minimization. Make records available to the European Commission and Digital Services Coordinators upon request.

12. Law Enforcement Requests

Verify authenticity of orders to act against illegal content or provide information. Respond within deadlines specified by the issuing authority and document compliance actions.

13. Moderation Guidelines & Training

- Maintain internal guidelines for moderators on classification, escalation, and cultural/linguistic context.

- Provide regular training and wellbeing support.

- Track accuracy metrics and continuous improvement steps.

14. Policy Review

Review this Policy at least annually or upon significant legal or operational changes. Obtain executive approval for updates and communicate them to users.

Annexes

- Annex A: Notice Form Template (multi-language fields)

- Annex B: Statement of Reasons Template

- Annex C: Appeal Handling Workflow

- Annex D: Trusted Flagger API Specification

- Annex E: Transparency Report Data Dictionary

[// GUIDANCE: Coordinate with content moderation partners and trust & safety teams to ensure operational alignment.]

Do more with Ezel

This free template is just the beginning. See how Ezel helps legal teams draft, research, and collaborate faster.

AI that drafts while you watch

Tell the AI what you need and watch your document transform in real-time. No more copy-pasting between tools or manually formatting changes.

- Natural language commands: "Add a force majeure clause"

- Context-aware suggestions based on document type

- Real-time streaming shows edits as they happen

- Milestone tracking and version comparison

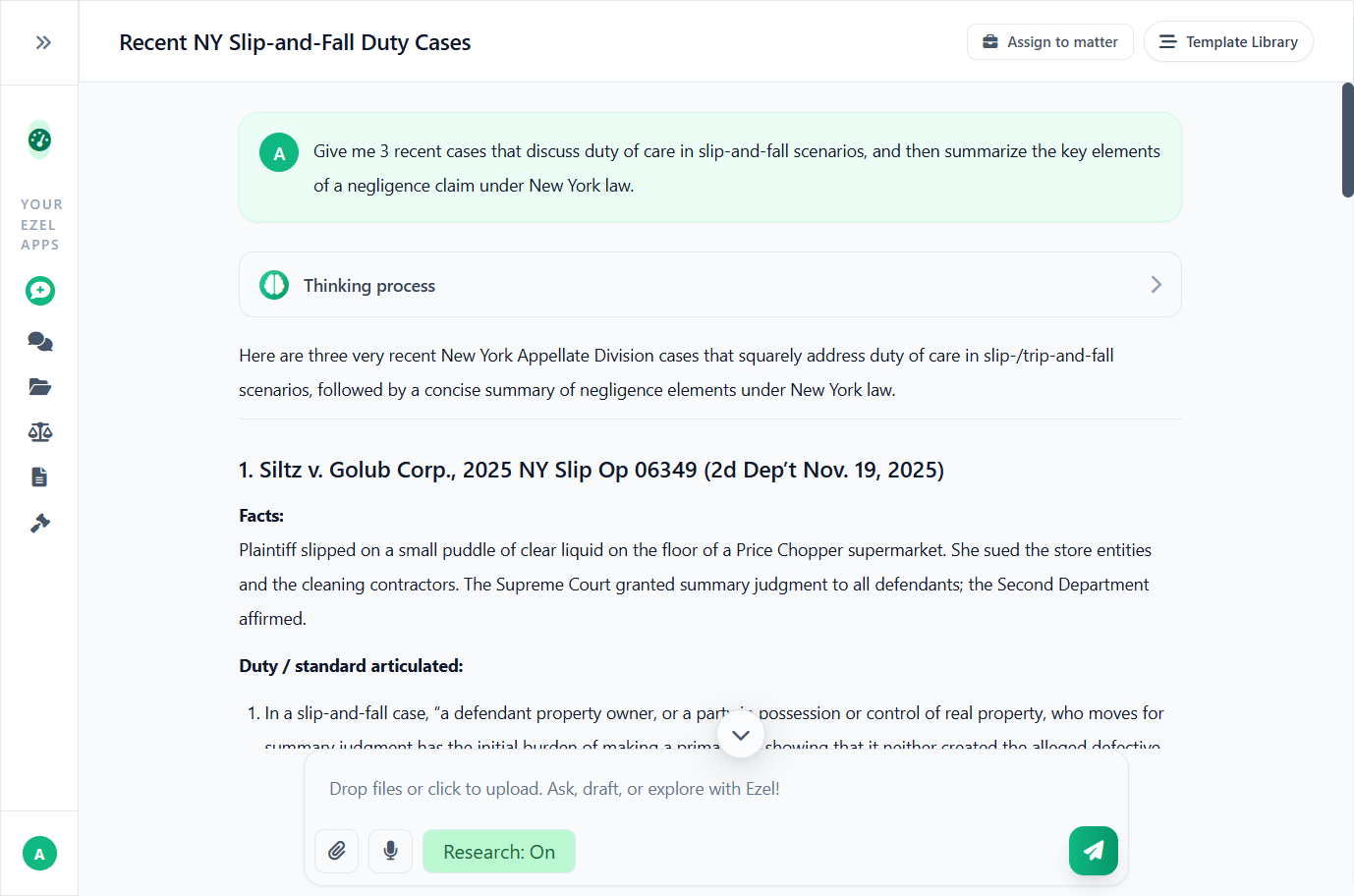

Research and draft in one conversation

Ask questions, attach documents, and get answers grounded in case law. Link chats to matters so the AI remembers your context.

- Pull statutes, case law, and secondary sources

- Attach and analyze contracts mid-conversation

- Link chats to matters for automatic context

- Your data never trains AI models

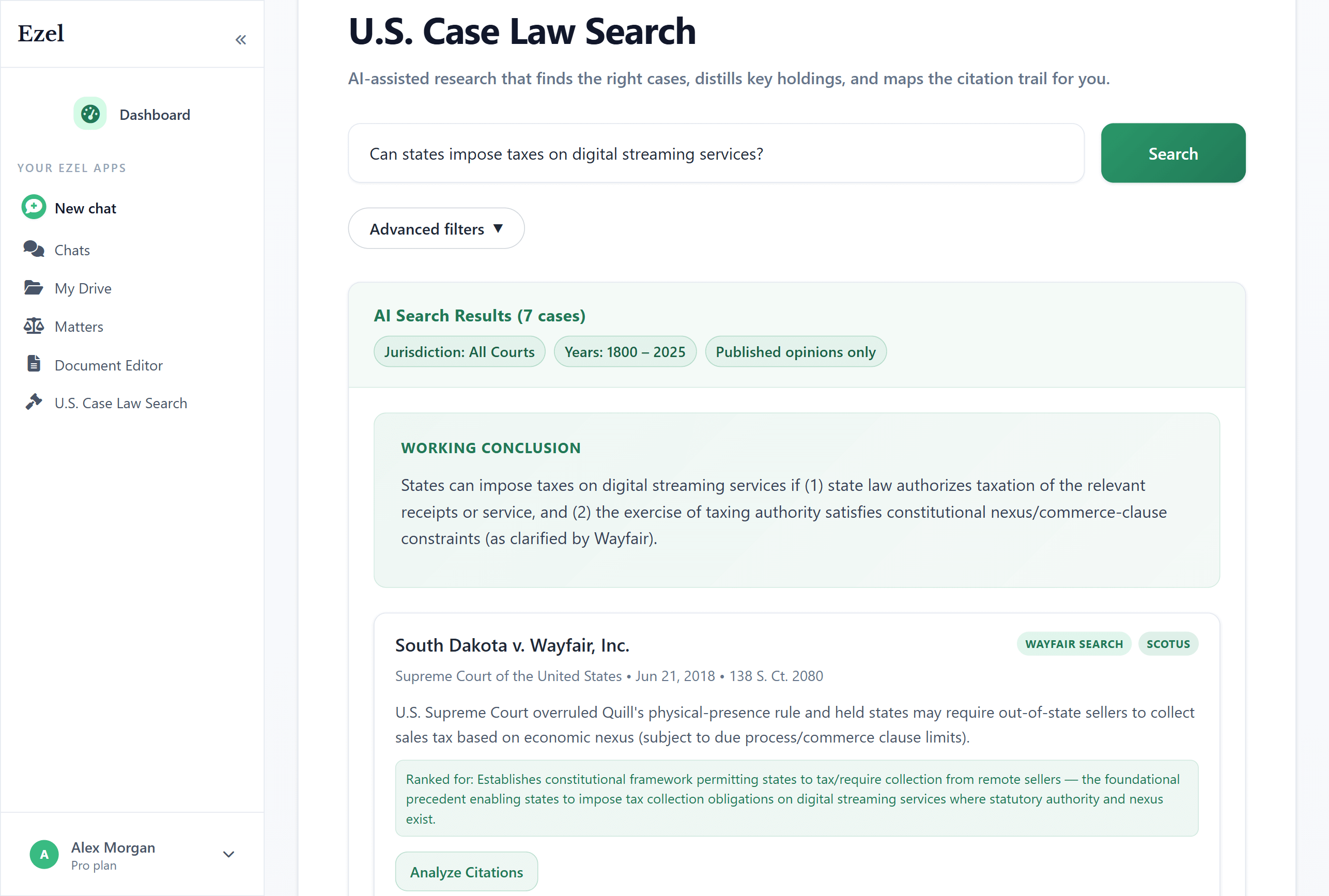

Search like you think

Describe your legal question in plain English. Filter by jurisdiction, date, and court level. Read full opinions without leaving Ezel.

- All 50 states plus federal courts

- Natural language queries - no boolean syntax

- Citation analysis and network exploration

- Copy quotes with automatic citation generation

Ready to transform your legal workflow?

Join legal teams using Ezel to draft documents, research case law, and organize matters — all in one workspace.